It’s been a while since I’ve written anything on this blog. I’ll admit that I started it back in 2020 as a tool to help get me interviews for Data Scientist roles. And it worked. But now I’ve been working as a Data Scientist for long enough to share my thoughts on the industry, and to use this and other forums to contemplate what the future holds and to clarify some positive steps towards it.

Since 2021, I’ve been working as a Data Scientist: briefly at Coefficient, and then at BT Group, before my current role as a Senior Data Scientist at HM Land Registry. So over the past three years, I’ve been fortunate to gain fairly varied data science experience. Add to this my previous experience – in climate finance, policy research and writing, and development economics (not to mention stints as a winemaker, sommelier, and teacher) – and I have a fair bit to share.

I worked with the Consumer arm of BT Group to personalise each customer’s experience across their range of interactions with the company. This spanned both the BT and EE brands, across both mobile and broadband. My work pushed beyond the traditional marketing applications of machine learning – like churn and propensity models – with newer techniques: profiling customers with nonlinear dimensionality reduction and clustering, and predicting the next best action for customer-facing apps, websites, and communications using Reinforcement Learning (RL).

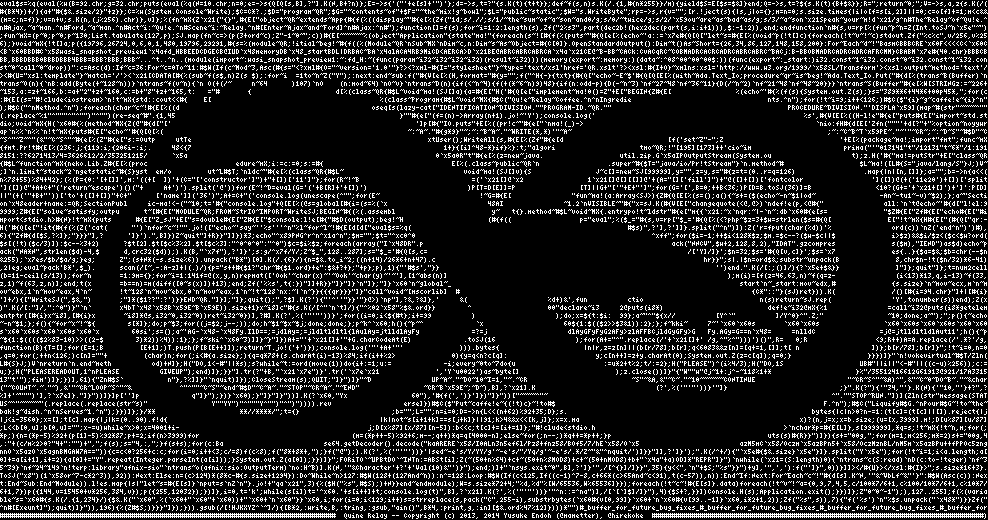

At HM Land Registry, my work to date has spanned two areas: developing our Machine Learning Operations (ML Ops) platform; and extracting data from printed documents using Computer Vision (CV), Optical Character Recognition (OCR), and Natural Language Processing (NLP). Currently, I’m using Retrieval Augmented Generation (RAG) to build an internal Large Language Model (LLM) tool that provides human-like responses to internal queries on Practice Guidance.

There are some key differences between these two experiences. Crucially, at BT Group I was focused on structured data, generally stored in relational databases, and thus on a more traditional machine learning toolset; while at HM Land Registry I’m focused on unstructured data like images and text, and thus depend more on frameworks built on deep learning foundations.

But there are a few factors in common which may not be immediately obvious to anyone looking in from the outside, and which may be useful to keep in mind when considering a Data Scientist role in a large organisation:

- While machine learning technology advances ever faster, the growing risks it brings require large organisations to develop policies by committee, so change can be gradual;

- But decisions can also be made suddenly, so don’t lose heart and imagine you’re stuck in a bureaucratic rut forever;

- Machine learning frameworks and software stacks are somewhat interchangeable, so get started with whatever interests you, and document your key findings and revelations as you go; and

- Even more than other professional fields, data science is about learning by doing, so dive right into whatever interests you and start experimenting.